Integrating ODIN Voice Chat in Unreal using C++

Although the Unreal Engine plugin comes with full Blueprint support to make ODIN as easy as possible to use in your game, you can just as easily integrate the plugin in your C++ Unreal project. Please make sure you have a basic understanding of how ODIN works, as this makes the following steps much easier to follow. Additionally, this guide assumes that you have basic knowledge of the Unreal Engine, its Editor and its C++ API.

This guide highlights the key steps to get started with ODIN. For a more detailed implementation, please refer to our Unreal Sample project. To get started quickly, you can copy the C++ classes from the Unreal Sample project and paste them into your own project.

This guide refers to the ODIN Voice Chat Plugin v1.x. Sample Code for version 1.x can be found on the v1.x branch of the sample repository.

Basic Process

As outlined in the introduction, every user connected to the same ODIN room (identified by a string of your choice) can exchange both data and voice. An ODIN room is created automatically by the ODIN server as soon as the first user joins and is removed once the last user leaves.

To join a room, a room token is required. This token grants access to an ODIN room and can be generated directly within the client. While this approach is sufficient for testing and development, it is not recommended for production because it exposes your ODIN Access Keys to the client. In production environments, the room token should be created on a secured server. To support this, we provide ready-to-use packages for JavaScript (via npm) and PHP (via Composer). In addition, we offer a complete server implementation that can be deployed as a cloud function on AWS or Google Cloud.

Once the room has been joined, users can exchange data such as text chat messages or other real-time information. If voice communication is required, a microphone stream (referred to as capture media) must be added to the room. This enables every participant to communicate with one another. More advanced techniques, such as 3D audio, allow users to update their spatial positions at regular intervals. The server then ensures that only nearby users hear the voice stream, reducing both bandwidth consumption and CPU usage. Details on these techniques will be discussed later.

Summary of the Basic Steps

- Obtain an access key.

- Create a room token using the access key and a specific room identifier (string identifier).

- Join the room with the generated room token.

- Add a capture media stream to connect the microphone to the room.

Implementing with C++

To integrate ODIN into an existing (or new) Unreal Engine project, you will work with the C++ classes provided in the Odin and OdinLibrary modules of the Odin Plugin. After installation, these modules must be added to your project’s build file, located at:

Source/<YourProject>/<YourProject>.Build.cs

In addition, you should include dependencies on Unreal Engine's AudioCapture and AudioCaptureCore modules, as these provide the functionality required to capture microphone input from the user. Your PublicDependencyModuleNames entry should therefore contain the Odin, OdinLibrary, AudioCapture and AudioCaptureCore modules in addition to the modules your project already uses.

Overview

In the sample, the component we are about to create derives from UActorComponent, but you can place the code wherever it best fits your architecture. A UActorComponent is often a good choice because it can be attached to any AActor to add functionality in a modular way.

You can attach this component to any actor, but the local Player Controller is typically the most appropriate location: it exists exactly once per client and is owned by that client, which aligns well with per-user voice operations. Ensure the hosting actor is present on every client; for example, the GameMode only exists on the server and is therefore not suitable.

In the following sections, we will build this component step by step and explain the reasoning behind each part.

Creating the Component

First, create the component class in C++. The simplest path is via the Unreal Editor:

- Open your project in the Unreal Editor.

- Navigate to Tools → New C++ Class….

- Select Actor Component as the parent class (this guide assumes UActorComponent, but choose whatever best fits your project).

- Name the class (for example OdinClientComponent) and set it to Public.

- Click Create.

The IDE (e.g., Visual Studio) will open and the project files should regenerate automatically. If they do not, return to your .uproject, right-click it in Explorer/Finder, and select Generate Visual Studio project files….

Once the class opens in your IDE, you can begin implementing the logic. Make sure your .Build.cs file already includes the required ODIN and audio modules as described above. This avoids "missing symbol" errors when you start adding ODIN types and audio capture code.

Creating an Access Key

Before you can connect to ODIN, you need to create an access key. An access key authenticates your requests to the ODIN server and contains your subscription-specific information, such as the maximum number of concurrent users allowed in a room and other configuration settings. A free access key allows up to 25 users to join the same room. For larger capacities or production use, you will need to subscribe to one of our paid tiers. See the pricing page for details.

For an in-depth explanation of access keys, refer to the Understanding Access Keys guide.

Creating a Demo Key

For now, you can generate a free demo access key for up to 25 concurrent users: click Create Access Key and store the key securely. You will need it later when joining a room and exchanging data or voice streams. A demo access key can later be upgraded to a full-access key.

Creating a Room Token

For this example, we will create the room token directly on the client, inside the component’s BeginPlay() function. In most real-world use cases you may not want players to automatically join voice chat as soon as the game starts. Typically you would trigger this with a specific gameplay event or user action. However, for testing purposes, BeginPlay() provides a convenient entry point.

To begin, declare a Token Generator and an FString for the room token as instance variables in your header file, and include the relevant ODIN header files so that the required types are available. Then initialize these properties in the BeginPlay() function: construct the Token Generator with your access key and call its GenerateRoomToken() function to create the room token.

In production, the room token should always be generated on a secure backend (e.g., a cloud function) and delivered to the client on demand. For testing, generating a token directly in the client is acceptable, but do not commit your access key to a public repository.

Both RoomName and UserName serve as placeholders. In practice, you will want to integrate these with your game's logic for assigning users to rooms and identities. For testing, it is enough that all clients use the same room name and generate the room token with the same access key in order for them to connect to the same ODIN room.

Similarly, replace <YOUR_ACCESS_KEY> with either your free access key or your own logic for securely reading the key from a local configuration file.

Configure the Room Access

When joining a room, you can configure ODIN's audio processing through APM settings. These control features such as Voice Activity Detection, noise suppression and several other options.

To begin, create a new FOdinApmSettings object as an instance variable. In your component's BeginPlay() function, assign the initial values that make sense for your game. After that, construct a UOdinRoom using these settings and keep it in an instance variable so you can manage it later.

You are encouraged to experiment with the available APM settings to find the configuration that best fits your gameplay style and audio requirements. For example, some games may benefit from aggressive noise suppression, while others may prefer to keep the voice stream more natural.

Event Flow

Once your client is connected to the ODIN server, several events will be triggered that enable you to set up your scene and connect audio output to player objects. Understanding and handling these events correctly is essential for a functioning voice integration.

The following table outlines the flow of a basic lobby application.

| Step | Name | Description |
|---|---|---|
| 1 | Join Room | The user navigates to the multiplayer lobby. All players currently in the lobby are connected to the same ODIN room, allowing them to coordinate before playing. The application calls Join Room. Note: The ODIN server automatically creates the room if it does not already exist. No bookkeeping is required on your end. |
| 2 | Peer Joined - already connected peers | Before the On Room Joined event is triggered, you will receive PeerJoined and MediaAdded events for each peer already present in the room and for each of their media streams. Keep this in mind when setting up your gameplay logic. |
| 3 | Room Joined | The On Room Joined event is triggered, giving you the opportunity to run logic on the client as it joins. |
| 4 | Peer Joined - new peers | For each user joining the room, an On Peer Joined event is fired. This event provides the peer's UserData, which your client application can use to share a user's identity or state directly through ODIN. |
| 5 | Media Added | For each user with an active microphone stream, an On Media Added event is triggered. While the previously discussed events are optional, your application will almost certainly need to handle this one. In the callback, assign the media object via Odin Assign Synth Media to an Odin Synth Component so audio can be played back in the scene. |
| 6 | Media Removed | When a user disconnects or closes their microphone stream, an On Media Removed event is triggered. Use this event to destroy or otherwise clean up Odin Synth Components that were previously connected to the media. |
| 7 | Peer Left | When a user leaves the room, the On Peer Left event is fired. This can be used, for example, to show a notification. Important: When a peer leaves, all of their media streams are removed as well, and corresponding On Media Removed events are triggered for each stream. |

This event sequence forms the backbone of how ODIN synchronizes users and audio streams in real time. By correctly handling these callbacks, you ensure that your voice chat remains stable, synchronized, and responsive as players join, speak, or leave.
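
The ordering in steps 2 and 3 is easy to overlook, so here is a small plain C++ model of it. This is not ODIN SDK code: `Peer` and `EventsOnJoin()` are hypothetical names, and the per-peer interleaving (a peer's PeerJoined followed directly by its MediaAdded events) is an assumption; the table only states that all of these arrive before Room Joined.

```cpp
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical stand-in for a peer that is already in the room when we join.
struct Peer {
    std::int64_t Id;
    std::vector<std::int64_t> MediaIds; // this peer's active capture streams
};

// Returns the events a newly joining client receives, in order (steps 2 and 3):
// every existing peer and its media are announced before "RoomJoined".
// Assumption: each peer's MediaAdded events directly follow its PeerJoined event.
std::vector<std::string> EventsOnJoin(const std::vector<Peer>& ExistingPeers) {
    std::vector<std::string> Events;
    for (const Peer& P : ExistingPeers) {
        Events.push_back("PeerJoined:" + std::to_string(P.Id));
        for (std::int64_t MediaId : P.MediaIds) {
            Events.push_back("MediaAdded:" + std::to_string(P.Id) + "/" + std::to_string(MediaId));
        }
    }
    Events.push_back("RoomJoined");
    return Events;
}
```

The practical consequence: your Peer Joined and Media Added handlers should not depend on state that you only set up in your Room Joined handler.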

Adding a Peer Joined Event

To handle ODIN events, create functions that you bind to the corresponding event delegates. In this guide we will connect the three core events used during initial setup: onPeerJoined, onMediaAdded, and the Room-Joined-onSuccess callback.

Because these are dynamic multicast delegates (usable in Blueprints), the callback functions must be declared with the UFUNCTION() macro. The success and error callbacks of the join, in contrast, are passed to the join task as delegate parameters, so your component needs additional instance variables of the respective delegate types.

We bind these handlers early, typically in BeginPlay(), so that no events are missed.

Next, implement the functions. For this walkthrough we start with OnPeerJoinedHandler. In production you would usually parse and use the peer's UserData (e.g., display name, team, avatar). For now, we keep it simple and only log the join event.

Always set up and bind your event handlers before joining a room. This ensures you receive PeerJoined and MediaAdded events for users who are already present when your client connects.

The On Media Added Event

The onMediaAdded event is fired whenever a user in the room first connects their capture stream (in most cases, this will be connected to a microphone). When you join a room, you will also receive this event, before Room Joined fires, for all peers who already have active capture streams.

The event provides a UOdinPlaybackMedia object representing the other peer's microphone input. This playback media object will provide access to a real-time audio stream (a sequence of float samples). To play it back, you need an audio component that converts this stream into audible output: the Odin Synth Component. Use UOdinSynthComponent::Odin_AssignSynthToMedia() to connect the synth to the UOdinPlaybackMedia object. This establishes the playback path. After assigning, make sure to activate the component so audio is actually produced.

For full 3D voice chat, the recommended approach is to add an Odin Synth Component to each player character or pawn and position it near the character's head. At runtime, you can retrieve the correct instance with GetComponentByClass() based on the peer the media belongs to. This ensures accurate spatialization and attenuation. You will need to keep track of which Synth Component is connected to which peer, e.g. by using a TMap.
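
A minimal sketch of that bookkeeping in plain C++, with `std::unordered_map` standing in for `TMap` and a hypothetical `FakeSynth` struct standing in for `UOdinSynthComponent`. It assumes one capture stream per peer and only illustrates the idea; it does not mirror the sample project's code.

```cpp
#include <cstdint>
#include <string>
#include <unordered_map>

// Hypothetical stand-in for a UOdinSynthComponent attached to a pawn.
struct FakeSynth {
    std::string Owner;    // name of the pawn the synth is attached to
    bool bActive = false; // mirrors Activate()/Deactivate()
};

// Tracks which synth plays which peer's voice (like TMap<int64, UOdinSynthComponent*>).
// Assumes one capture stream per peer.
class SynthRegistry {
public:
    // On Media Added: remember the synth for this peer and "activate" it.
    void Assign(std::int64_t PeerId, FakeSynth* Synth) {
        FakeSynth* Previous = Find(PeerId);
        if (Previous != nullptr && Previous != Synth) {
            Previous->bActive = false; // stop the old synth before replacing it
        }
        Synth->bActive = true;
        SynthsByPeer[PeerId] = Synth;
    }

    // On Media Removed / Peer Left: deactivate and forget the synth.
    // Returns false if nothing was assigned, so a duplicate event is harmless.
    bool Release(std::int64_t PeerId) {
        auto It = SynthsByPeer.find(PeerId);
        if (It == SynthsByPeer.end()) {
            return false;
        }
        It->second->bActive = false;
        SynthsByPeer.erase(It);
        return true;
    }

    FakeSynth* Find(std::int64_t PeerId) const {
        auto It = SynthsByPeer.find(PeerId);
        return It == SynthsByPeer.end() ? nullptr : It->second;
    }

private:
    std::unordered_map<std::int64_t, FakeSynth*> SynthsByPeer;
};
```

Making `Release()` tolerate unknown peers matters because, as the table notes, a leaving peer triggers Media Removed for each stream in addition to Peer Left, so cleanup code may see the same peer more than once.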

To keep this guide focused, we will ignore 3D spatialization and provide 2D output only. In that case, you can call AActor::AddComponentByClass() at runtime to create and attach an Odin Synth Component to the locally controlled player character. This avoids the need to resolve which character corresponds to the ODIN peer and simplifies initial setup.

That's it. With this setup, every user connected to the same room will be heard at full volume through the local player’s position.

In a real 3D game you would not route all audio through the local player. Instead, you would map the ODIN Peer ID to your Unreal Player's Unique Net ID and assign each Playback Media stream to the correct player character. This way Unreal's audio engine automatically applies spatialization and attenuation, i.e. reducing the volume as players move farther away from the listener.

If you would like to see a practical example of how to correctly connect playback streams to player pawns, refer to our Minimal Multiplayer Sample Project.

Joining a Room

Now we have everything in place to join a room. A room token for the room RoomName has been created, and the room settings for our client have been configured. The final step is to combine both and initiate the join.

Since joining requires waiting for the ODIN server to respond, it is handled as an asynchronous task. The implementation was designed with Blueprint usability in mind, but it can just as easily be called from C++ by constructing the task and then calling its Activate() function. To keep this guide simple, we will not provide any additional UserData.

If the join fails, the OnOdinErrorHandler() function is called. It receives an error code that can be passed to UOdinFunctionLibrary::FormatError() and then logged for easier debugging.

In this step, we pass the Odin Room together with its APM settings, the default ODIN gateway URL, the local room token, empty user data, a zero vector as the initial position, and the two delegates for handling success and error events. With this, the client attempts to join the room and responds to either outcome.
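
The construct-then-Activate() pattern can be illustrated with a hypothetical plain C++ model. `FakeJoinTask` is not the ODIN join task: it replaces the server round trip with a trivial rule (an empty token fails) and uses an arbitrary error code, purely to show that constructing the task sends nothing and that exactly one of the two callbacks runs.

```cpp
#include <functional>
#include <string>
#include <utility>

// Hypothetical model of a Blueprint-style async action such as the join task.
class FakeJoinTask {
public:
    using SuccessFn = std::function<void(const std::string& RoomId)>;
    using ErrorFn = std::function<void(long long ErrorCode)>;

    // Construction only stores the inputs and callbacks; nothing is sent yet.
    FakeJoinTask(std::string InToken, SuccessFn InOnSuccess, ErrorFn InOnError)
        : Token(std::move(InToken)), OnSuccess(std::move(InOnSuccess)), OnError(std::move(InOnError)) {}

    // Stand-in for the server round trip: an empty token fails, anything else succeeds.
    void Activate() {
        if (bActivated) {
            return; // this model reports its outcome only once
        }
        bActivated = true;
        if (Token.empty()) {
            if (OnError) OnError(1); // arbitrary placeholder error code
        } else if (OnSuccess) {
            OnSuccess("RoomName");
        }
    }

private:
    std::string Token;
    SuccessFn OnSuccess;
    ErrorFn OnError;
    bool bActivated = false;
};
```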

The default gateway used here is located in Europe. If you'd like to connect to a gateway in another region, please take a look at our available gateways list.

Adding a Media Stream

Now that we have successfully joined a room, we need to add our capture stream (microphone) so that other users in the room can hear us. First, create an Odin Audio Capture object by calling UOdinFunctionLibrary::CreateOdinAudioCapture(). Then create a capture media object by calling UOdinFunctionLibrary::Odin_CreateMedia() with the audio capture object as its input parameter. Finally, use the asynchronous task UOdinRoomAddMedia::AddMedia() to attach the capture stream to the room. As before, we declare the necessary delegates and pass them along with the call.

Because the capture object must stay alive for as long as audio is being captured, we store it in an instance variable. This also allows us to start and stop microphone input whenever needed. With the SetIsMuted function, it becomes trivial to implement features like muting or push-to-talk later on.
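
A sketch of how mute and push-to-talk could be layered on top of SetIsMuted. `FakeAudioCapture` and `VoiceInput` are hypothetical plain C++ stand-ins; in Unreal, the same logic would call SetIsMuted on the stored Audio Capture object from your input bindings.

```cpp
// Hypothetical stand-in for the stored Audio Capture object; only muting is modeled.
struct FakeAudioCapture {
    bool bMuted = false;
    void SetIsMuted(bool bInMuted) { bMuted = bInMuted; }
};

// Combines a push-to-talk key with a manual mute toggle and pushes the result
// into SetIsMuted() whenever either input changes. The manual mute always wins.
class VoiceInput {
public:
    VoiceInput(FakeAudioCapture& InCapture, bool bInPushToTalk)
        : Capture(InCapture), bPushToTalk(bInPushToTalk) {
        Apply();
    }

    void SetTalkKeyHeld(bool bHeld) { bTalkKeyHeld = bHeld; Apply(); }
    void SetUserMuted(bool bMuted) { bUserMuted = bMuted; Apply(); }

private:
    void Apply() {
        // The mic is open only if not muted and, in push-to-talk mode, the key is held.
        const bool bOpen = !bUserMuted && (!bPushToTalk || bTalkKeyHeld);
        Capture.SetIsMuted(!bOpen);
    }

    FakeAudioCapture& Capture;
    bool bPushToTalk;
    bool bTalkKeyHeld = false;
    bool bUserMuted = false;
};
```

Keeping the decision in one `Apply()` function avoids the usual push-to-talk bug where releasing the key accidentally unmutes a player who had muted themselves.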

You can reuse the same error handler for OnAddMediaError that you bound to OnRoomJoinError. It provides an error code as well, which you can format and log in the same way.

Make sure to call StartCapturingAudio() only after you have successfully created the audio capture with CreateOdinAudioCapture(), constructed the media with Odin_CreateMedia(), and added it to the room using AddMedia().
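
To make that order explicit, here is a small hypothetical state machine in plain C++ that refuses to start capturing before the media has been added. Starting capture from the AddMedia success callback, modeled here by `OnMediaAdded()`, is one conservative way to satisfy the rule; the names are illustrative only.

```cpp
// Hypothetical model of the capture setup order described in this section.
enum class CaptureStep { None, CaptureCreated, MediaCreated, MediaAdded, Capturing };

class CaptureSetup {
public:
    bool CreateCapture()  { return Advance(CaptureStep::None, CaptureStep::CaptureCreated); }
    bool CreateMedia()    { return Advance(CaptureStep::CaptureCreated, CaptureStep::MediaCreated); }
    // Called from the AddMedia success callback.
    bool OnMediaAdded()   { return Advance(CaptureStep::MediaCreated, CaptureStep::MediaAdded); }
    bool StartCapturing() { return Advance(CaptureStep::MediaAdded, CaptureStep::Capturing); }

    CaptureStep Current() const { return Step; }

private:
    // Moves to Next only if we are exactly at Expected; otherwise refuses.
    bool Advance(CaptureStep Expected, CaptureStep Next) {
        if (Step != Expected) {
            return false;
        }
        Step = Next;
        return true;
    }

    CaptureStep Step = CaptureStep::None;
};
```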

Testing with ODIN Web Client

Since ODIN is fully cross-platform, you can use the ODIN web client to connect users in a browser to your Unreal-based game. This is useful for many scenarios (see the Use Cases Guide), and it is also a very practical tool during development.

For testing purposes, you can use the following Test Web App: https://4players.app/. Configure the client to use the same access key that you already set up in Unreal: click the Settings icon in the center, then switch to Development Settings. You should see a configuration dialog similar to the one pictured below.

Enter the access key you created and saved earlier and make sure the gateway is identical to the one used in Unreal (gateway.odin.4players.io, do not prefix with https:// in the Test Web App). The Test Web App will now use the same access key and gateway as your Unreal project, allowing both platforms to connect to the same ODIN room.

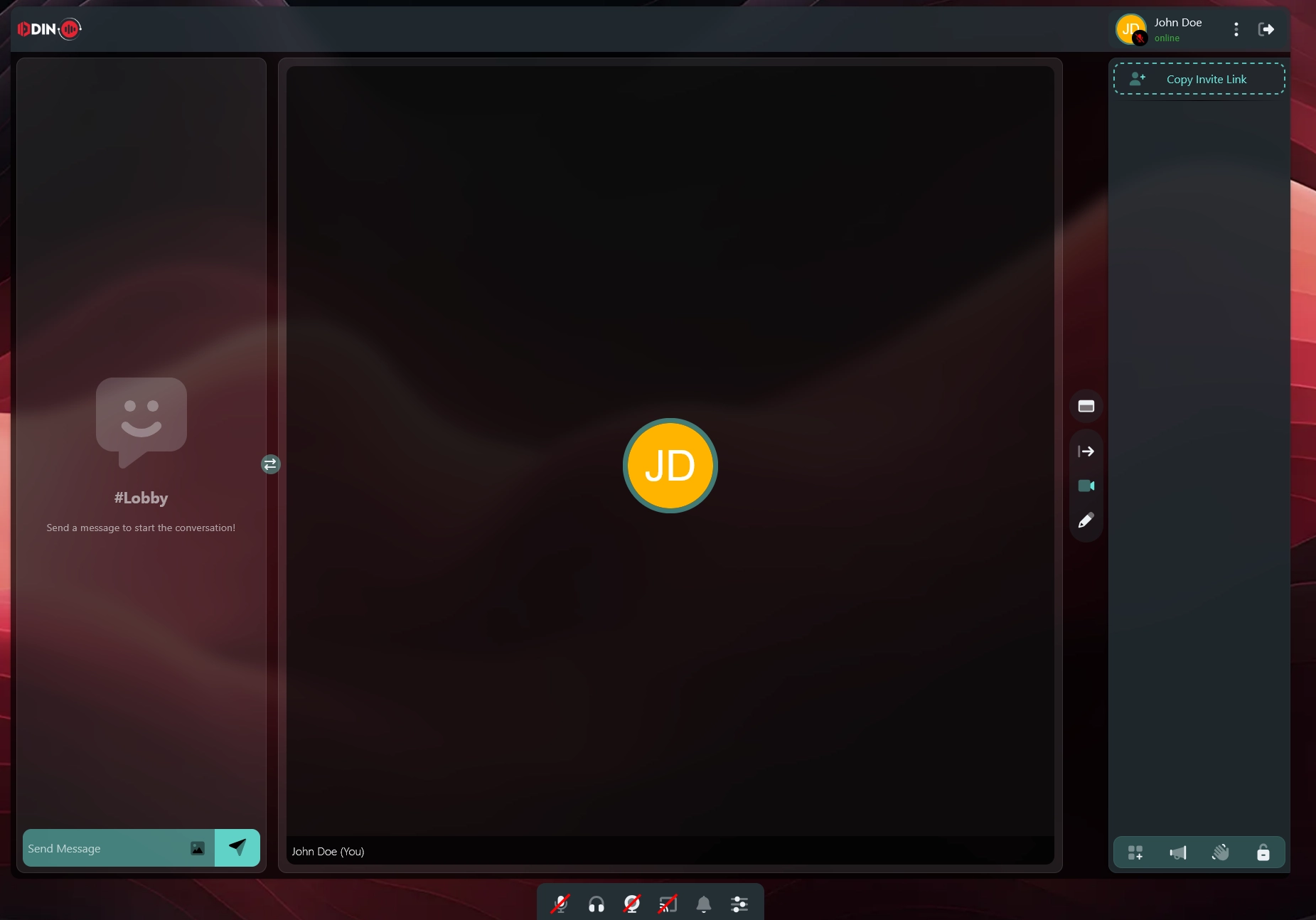

In the connection dialog, set the same room name that you used in Unreal (for example RoomName) and provide any display name. Do not enter a password. Then click Join Room.

Now switch back to your IDE, let your project compile, and then open the Unreal Editor. When you start Play In Editor, your game will join the same ODIN room as the browser client. In the web client, you should hear a notification sound indicating that another user has joined, and a new entry called Unknown appears in the user list. If you speak into your microphone, you should hear yourself through the browser.

For additional testing, ask a colleague or friend to set up the ODIN client with your access key and the same room name. This way you can chat together, one user inside the game and the other in their browser. This workflow is a quick and reliable way to validate your setup, and it is something we regularly use internally at 4Players.

Enabling 3D Audio

So far, we have enabled voice chat in the application, but most likely you will also want to make use of Unreal's 3D audio engine. Enabling this is straightforward: you can assign Attenuation Settings directly to your Odin Synth Component. However, there is another important consideration: making sure that the Odin Synth Components are positioned correctly in the scene.

The simplest approach is to attach Odin Synth Components to the Pawns that represent the respective players. To do this reliably, you need to track which Odin Peer is associated with which player. Let's take a closer look at how to achieve that.

The exact implementation depends on your networking and replication system. In most cases you will be using Unreal Engine's native networking, so we will assume that setup here. If you are using a different system, you will need to adapt the approach to fit your networking solution.

Creating the Necessary Classes

The goal of the next steps is to map each Odin Peer Id to the corresponding Character in the scene so that every Odin Media Stream can be assigned to the correct Actor. To achieve this we need to create two additional classes alongside the existing UOdinClientComponent.

First, we create a new class derived from Unreal's ACharacter. Each ACharacter exists on all clients, and there is one instance for every connected player (including a possible ListenServer). This makes it the ideal place to replicate a player's ID from the server to all clients. In this guide, the class is called AOdinCharacter. Don't forget to update your Game Mode to reference this class so it will be used in your project. Alternatively, you can derive your current Default Player Character class from AOdinCharacter to retain existing functionality. In the sample project, the Blueprint BP_ThirdPersonCharacter was simply reparented to AOdinCharacter for this purpose.

Next, we create a class derived from UGameInstance to hold the mapping. A Game Instance object exists on each client but is never replicated, making it suitable for storing and maintaining per-client data. In this guide, the derived class is called UOdinGameInstance.

After creating these two classes and regenerating the Visual Studio project files, you can begin implementing the logic to connect Odin peers with their corresponding characters.

Creating the Player Character Maps

To assign the correct Odin Synth Component to the appropriate player-controlled character, we need to track a unique identifier for each player, their characters, and their Odin Peer IDs.

In the UOdinGameInstance class, create two maps: one mapping the unique player ID to the player character (using an FGuid), and another mapping the Odin Peer ID to the player character (using an int64). The first map keeps track of which player character belongs to which player. When another Odin peer joins the voice chat room, you can then use this mapping to determine which peer ID corresponds to which player character.

Declare both maps as member variables in the header of UOdinGameInstance.
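
The two maps and the lookup between them can be modeled in plain C++ as follows. This is a hypothetical sketch: `std::string` stands in for `FGuid`, `std::unordered_map` for `TMap`, and `FakeCharacter` for `AOdinCharacter`; the map names follow the PlayerCharacters and Odin Player Character maps used later in this guide.

```cpp
#include <cstdint>
#include <string>
#include <unordered_map>

// Hypothetical stand-in for AOdinCharacter.
struct FakeCharacter {
    std::string Name;
};

// Models UOdinGameInstance's two maps.
class PlayerRegistry {
public:
    // PlayerId -> character, filled once a character's PlayerId is known.
    void RegisterPlayer(const std::string& PlayerId, FakeCharacter* Character) {
        PlayerCharacters[PlayerId] = Character;
    }

    // Peer Id -> character, filled once a peer's user data reveals its PlayerId.
    // Returns false if no character with that PlayerId is registered (yet).
    bool LinkPeer(std::int64_t PeerId, const std::string& PlayerId) {
        const auto It = PlayerCharacters.find(PlayerId);
        if (It == PlayerCharacters.end()) {
            return false;
        }
        OdinPlayerCharacters[PeerId] = It->second;
        return true;
    }

    // Used by the Media Added handler to find where a peer's voice should play.
    FakeCharacter* CharacterForPeer(std::int64_t PeerId) const {
        const auto It = OdinPlayerCharacters.find(PeerId);
        return It == OdinPlayerCharacters.end() ? nullptr : It->second;
    }

private:
    std::unordered_map<std::string, FakeCharacter*> PlayerCharacters;
    std::unordered_map<std::int64_t, FakeCharacter*> OdinPlayerCharacters;
};
```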

Adjusting the Join Room Routine

Next, we will move the routine that joins an ODIN room from the BeginPlay() function of UOdinClientComponent into its own function. The reason is simple: the client must wait until it knows its own PlayerId, because that value needs to be sent as User Data in the Join Room call.

Move the code from BeginPlay() into a dedicated function (called ConnectToOdin() in this guide) and declare that function in the header as well. This function takes the FGuid that we pass as User Data.

To pass the PlayerId as User Data, use the UOdinJsonObject helper class from the Odin SDK. Create a UOdinJsonObject, add a String Field with the key PlayerId, and set its value to the GUID converted to an FString (for example with FGuid::ToString()). With EncodeJsonBytes() you can convert the JSON object to a TArray<uint8>, which is what the JoinRoom action expects.
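
For illustration, this plain C++ function produces roughly the byte payload described above: a JSON object with a single PlayerId field, returned as raw bytes. It is a hypothetical sketch that skips JSON escaping, which is only safe for plain identifiers such as a GUID string; in the Unreal project, let UOdinJsonObject and EncodeJsonBytes() do this work.

```cpp
#include <cstdint>
#include <string>
#include <vector>

// Builds {"PlayerId":"<id>"} and returns it as bytes (the role TArray<uint8> plays in Unreal).
// No escaping is performed, so PlayerId must not contain quotes or backslashes.
std::vector<std::uint8_t> EncodePlayerIdUserData(const std::string& PlayerId) {
    const std::string Json = "{\"PlayerId\":\"" + PlayerId + "\"}";
    return std::vector<std::uint8_t>(Json.begin(), Json.end());
}
```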

Propagating an Identifier

If you have not done so earlier, now is the time to attach the component to your Player Controller. For this sample we assume you have added UOdinClientComponent to your game's default PlayerController. You can do this either in C++ within your PlayerController class, or via the Blueprint Editor if your default controller is a Blueprint.

With that in place, we can propagate a per-player identifier for the current game session. You can use GUIDs or any existing unique player identifiers you already maintain (for example from a login flow). First, declare a replicated PlayerId variable in AOdinCharacter and configure the class for replication. Because each client should react as soon as PlayerId is replicated, so that it can add the ID to its local map of player IDs and player characters, declare the variable with the ReplicatedUsing specifier and an OnRep_PlayerId() notify function.

Next, implement GetLifetimeReplicatedProps() to enable replication of PlayerId.

You can assign the ID at any appropriate point during startup. A good entry point is the BeginPlay function of the Character class. On the server, set the replicated variable so it propagates to all clients. Also add the newly created PlayerId to the Game Instance's PlayerCharacters map.

If this character is locally controlled, start the ODIN room join routine on the UOdinClientComponent.

Finally, implement OnRep_PlayerId(), which is invoked on each client once the PlayerId arrives. Here you perform the same bookkeeping as above, without creating a new GUID. Since the client now knows its PlayerId, it can start the ConnectToOdin() routine.
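
The bookkeeping from the last three paragraphs can be summarized in a hypothetical plain C++ model of one machine. `FakeOdinCharacter` and `LocalOdinBookkeeping` are illustrative stand-ins; in Unreal the ID would come from FGuid::NewGuid(), the local check from IsLocallyControlled(), and each entry in `ConnectCalls` would be a call to ConnectToOdin().

```cpp
#include <string>
#include <unordered_map>
#include <vector>

// Hypothetical stand-in for AOdinCharacter as seen on one machine.
struct FakeOdinCharacter {
    std::string PlayerId;            // replicated property in the real class
    bool bLocallyControlled = false; // IsLocallyControlled() in Unreal
};

// Models one machine's Game Instance map plus the two entry points described above.
class LocalOdinBookkeeping {
public:
    // Server: BeginPlay assigns a fresh ID, which then replicates to all clients.
    void ServerBeginPlay(FakeOdinCharacter& Character, const std::string& NewPlayerId) {
        Character.PlayerId = NewPlayerId;
        Register(Character);
    }

    // Client: OnRep_PlayerId() runs once the replicated ID has arrived.
    void OnRepPlayerId(FakeOdinCharacter& Character) { Register(Character); }

    std::unordered_map<std::string, FakeOdinCharacter*> PlayerCharacters;
    std::vector<std::string> ConnectCalls; // PlayerIds that would be passed to ConnectToOdin()

private:
    void Register(FakeOdinCharacter& Character) {
        PlayerCharacters[Character.PlayerId] = &Character;
        // Only the character this machine controls joins the ODIN room.
        if (Character.bLocallyControlled) {
            ConnectCalls.push_back(Character.PlayerId);
        }
    }
};
```

On a dedicated server no character is locally controlled, so in this model the server only fills its map and never joins the room itself.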

Handling the Peer Joined Event with an Identifier

With player identifiers in place, each client will receive an event when a player joins the ODIN room. In this handler, extract the player identifier from the provided user data. Because the Odin Media object arrives in a separate event without user data, you also need to establish a mapping from Odin Peer Id to your player characters. Once you resolve which character belongs to the newly joined peer, add that character to the new map using the passed Peer Id.
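
A plain C++ sketch of that handler logic, assuming user data shaped like {"PlayerId":"..."}. The minimal string search in `ExtractPlayerId()` is a stand-in for decoding with UOdinJsonObject and does not handle escaped characters; `HandlePeerJoined()` and `FakeCharacter` are hypothetical names.

```cpp
#include <cstdint>
#include <optional>
#include <string>
#include <unordered_map>
#include <vector>

struct FakeCharacter { std::string Name; }; // hypothetical stand-in for AOdinCharacter

// Finds the value of "PlayerId" in the user data; assumes no escaped quotes.
std::optional<std::string> ExtractPlayerId(const std::vector<std::uint8_t>& UserData) {
    const std::string Text(UserData.begin(), UserData.end());
    const std::string Key = "\"PlayerId\":\"";
    const auto Start = Text.find(Key);
    if (Start == std::string::npos) {
        return std::nullopt;
    }
    const auto ValueBegin = Start + Key.size();
    const auto ValueEnd = Text.find('"', ValueBegin);
    if (ValueEnd == std::string::npos) {
        return std::nullopt;
    }
    return Text.substr(ValueBegin, ValueEnd - ValueBegin);
}

// Peer Joined: read the PlayerId, look up the character, and remember it under
// the ODIN peer id for the later Media Added event.
bool HandlePeerJoined(std::int64_t PeerId, const std::vector<std::uint8_t>& UserData,
                      const std::unordered_map<std::string, FakeCharacter*>& PlayerCharacters,
                      std::unordered_map<std::int64_t, FakeCharacter*>& OdinPlayerCharacters) {
    const std::optional<std::string> PlayerId = ExtractPlayerId(UserData);
    if (!PlayerId) {
        return false; // peer without our user data, e.g. a browser client
    }
    const auto It = PlayerCharacters.find(*PlayerId);
    if (It == PlayerCharacters.end()) {
        return false; // no character with this PlayerId known on this machine
    }
    OdinPlayerCharacters[PeerId] = It->second;
    return true;
}
```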

Handling the Media Added Event with an Identifier

With the identifier mapping in place, you can now attach the Odin Synth Component to the correct player character. In your handler for the Media Added event, do not attach the component to the local player by default. Instead, resolve the target character using the Peer Id and your Game Instance's Odin Player Character map, then attach and activate the synth component on that character.
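
A hypothetical plain C++ sketch of that resolution step. Appending to `SynthPeerIds` stands in for creating the Odin Synth Component, assigning the media with Odin_AssignSynthToMedia() and activating it; returning nullptr for unknown peers leaves it to the caller to log the case or fall back to the 2D setup described earlier.

```cpp
#include <cstdint>
#include <string>
#include <unordered_map>
#include <vector>

// Hypothetical stand-in for a character that can carry synth components.
struct FakeCharacter {
    std::string Name;
    std::vector<std::int64_t> SynthPeerIds; // one entry per attached, activated synth
};

// Media Added: resolve the character through the peer-id map, then attach a synth.
// Returns the character that received the synth, or nullptr if the peer is unknown.
FakeCharacter* HandleMediaAdded(std::int64_t PeerId,
                                const std::unordered_map<std::int64_t, FakeCharacter*>& OdinPlayerCharacters) {
    const auto It = OdinPlayerCharacters.find(PeerId);
    if (It == OdinPlayerCharacters.end() || It->second == nullptr) {
        return nullptr;
    }
    // Stands in for AddComponentByClass + Odin_AssignSynthToMedia + Activate.
    It->second->SynthPeerIds.push_back(PeerId);
    return It->second;
}
```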

Conclusion

This is all you need to add Odin Synth Components to the correct player characters. From here, you can adjust the attenuation settings to whatever best fits your 3D world. The most straightforward approach is simply assigning an attenuation settings asset created in the Unreal Editor to your Odin Synth Component.

Because Odin itself is agnostic of the underlying audio engine, you are free to use the built-in Unreal Audio Engine or any third-party solution such as Steam Audio, Microsoft Audio, FMOD, or Wwise. All of these work by configuring your project and applying the appropriate attenuation settings to the Odin Synth Component.